Data Curation

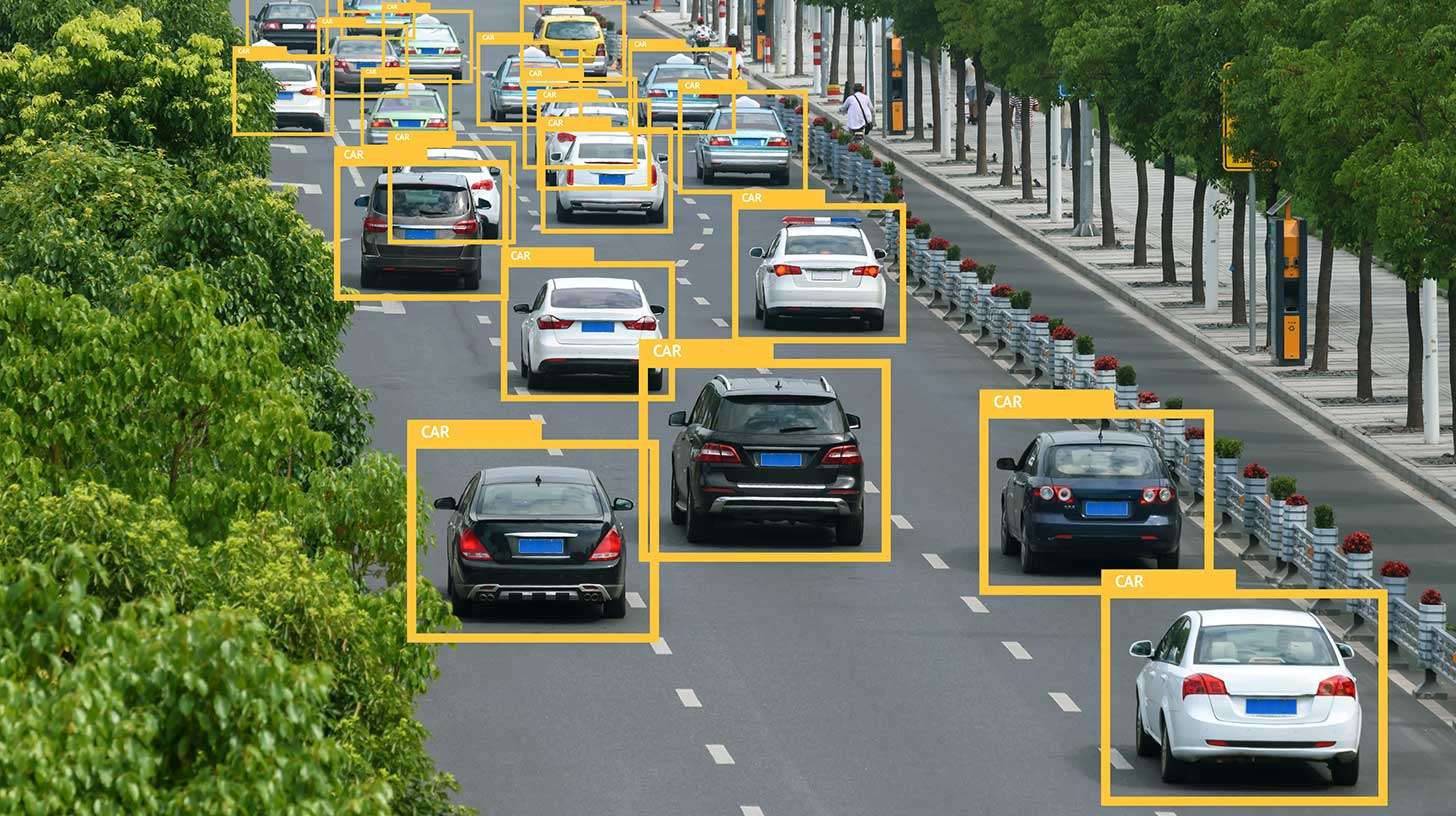

Create a diverse, balanced, and representative dataset of your scenarios to optimize your models’ performance while reducing annotation costs.


Data Reliability and Quality

Rigorous data curation eliminates errors, duplicates, and inconsistencies, ensuring your AI models are trained on reliable and representative data.

This reduces bias, improves prediction accuracy, and maximizes the robustness of results.

Consistency and Interoperability

Structuring and harmonizing formats, ontologies, and labeling schemas ensure your data is consistent and easily usable across different systems, pipelines, and AI platforms.

Your analyses become smoother, and projects can be deployed faster.

Analytical Value and Enhanced Performance

Enriching data with metadata, derived attributes, and contextual information increases its analytical depth.

Your AI models can then detect complex patterns and generate precise, actionable insights.

Expertise

Reliable, ready-to-use data

Our teams follow a proven methodology to ensure the reliability of your data: multi-source normalization; an automated pipeline combined with human oversight; full versioning and traceability; and balanced, consistent training datasets.

We analyze your data to identify errors, duplicates, outliers, or inconsistencies between sources.

Once detected, we correct or remove problematic elements to obtain a clean, reliable, and consistent corpus. This step reduces noise, limits biases, and improves the stability of trained models.
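As an illustration, the detection step could look like the minimal Python sketch below. It assumes records held as a list of dictionaries and uses three simple heuristics: exact-match duplicates, empty required fields, and the interquartile-range (IQR) rule for numeric outliers. The helper `find_issues` and the field names `id`, `label`, and `length_m` are hypothetical; real projects typically add near-duplicate matching and domain-specific rules.

```python
from statistics import quantiles

def find_issues(records, required_fields, numeric_field):
    """Flag exact duplicates, missing required values and IQR outliers.

    `records` is a list of dicts with hashable values. Returns a dict that
    maps each issue type to the indices of the affected records.
    """
    issues = {"duplicate": [], "missing": [], "outlier": []}
    seen = set()
    for i, rec in enumerate(records):
        # Exact duplicate: same field/value pairs as an earlier record.
        key = tuple(sorted(rec.items()))
        if key in seen:
            issues["duplicate"].append(i)
        seen.add(key)
        # Missing value: a required field is absent, None or an empty string.
        if any(rec.get(f) in (None, "") for f in required_fields):
            issues["missing"].append(i)

    # Outliers: values outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR] on one numeric field.
    values = [(i, r[numeric_field]) for i, r in enumerate(records)
              if isinstance(r.get(numeric_field), (int, float))]
    if len(values) >= 4:
        q1, _, q3 = quantiles([v for _, v in values], n=4, method="inclusive")
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        issues["outlier"] = [i for i, v in values if v < low or v > high]
    return issues


records = [
    {"id": 1, "label": "car", "length_m": 4.2},
    {"id": 2, "label": "car", "length_m": 4.5},
    {"id": 2, "label": "car", "length_m": 4.5},    # exact duplicate of the previous row
    {"id": 3, "label": "", "length_m": 4.0},       # missing label
    {"id": 4, "label": "truck", "length_m": 4.4},
    {"id": 5, "label": "car", "length_m": 420.0},  # likely a unit error
]
print(find_issues(records, ["id", "label"], "length_m"))
# {'duplicate': [2], 'missing': [3], 'outlier': [5]}
```

Flagged rows are candidates for review: the outlier in this example is more plausibly a unit error to correct than a value to delete.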

We standardize your data by harmonizing file formats, structures, ontologies, nomenclatures, and labeling schemas.

This normalization ensures internal consistency of the dataset and facilitates processing by your AI pipelines. It also makes it possible to unify multiple heterogeneous batches into a single consistent corpus.
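A minimal sketch of field-name and unit harmonization, assuming a hypothetical target schema with a `label` field and a `length_m` field in metres. The alias table, conversion factors, and the `normalize_record` helper are illustrative; in a real project they would come from the specification agreed for that dataset.

```python
# Hypothetical mapping from source-specific field names to the target schema.
FIELD_ALIASES = {
    "obj_class": "label", "category": "label", "label": "label",
    "len_mm": "length_m", "length_cm": "length_m", "length_m": "length_m",
}
# Factor that converts each source length field to metres.
UNIT_FACTORS = {"len_mm": 0.001, "length_cm": 0.01, "length_m": 1.0}

def normalize_record(raw):
    """Rename fields to the target schema, convert units, harmonize labels."""
    out = {}
    for field, value in raw.items():
        target = FIELD_ALIASES.get(field)
        if target is None:
            continue  # field outside the target schema: dropped here
        if field in UNIT_FACTORS:
            value = round(float(value) * UNIT_FACTORS[field], 6)
        elif isinstance(value, str):
            value = value.strip().lower()  # harmonize spacing and case
        out[target] = value
    return out


print(normalize_record({"obj_class": " Car ", "len_mm": 4200, "internal_ref": "X1"}))
# {'label': 'car', 'length_m': 4.2}
```

Keeping the rules in two tables rather than in code makes them easy to review, version, and extend when a new source arrives.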

We structure and prepare your datasets by selecting relevant data, balancing classes, and organizing datasets according to use-case scenarios.

This step helps reduce bias and enhances the robustness and generalization capabilities of your models.

Ensure the consistency and compatibility of datasets from different sources to produce a single, clean, and usable dataset.

We merge and harmonize data from multiple sources, each of which may have different formats, quality, or structures.

Using precise normalization rules, we create a coherent corpus aligned with your objectives, which improves model stability and makes data from different sources reliably comparable.
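One way to express such merge rules is a priority order between sources, sketched below in Python. It assumes records that have already been normalized to a shared schema with a unique `id` key; the helper `merge_sources`, the source names, and the `_sources` provenance field are hypothetical.

```python
def merge_sources(sources, key="id"):
    """Merge normalized records from several sources into one corpus.

    `sources` is a list of (source_name, records) pairs ordered by priority:
    when two sources disagree on a field, the earlier source wins.
    Each merged record keeps a `_sources` list recording its provenance.
    """
    merged = {}
    for name, records in sources:
        for rec in records:
            k = rec[key]
            target = merged.setdefault(k, {key: k, "_sources": []})
            for field, value in rec.items():
                # Only fill fields not already set by a higher-priority source.
                if field not in target and value not in (None, ""):
                    target[field] = value
            target["_sources"].append(name)
    return list(merged.values())


lidar = [{"id": 1, "label": "car", "length_m": 4.2}]
legacy = [{"id": 1, "label": "vehicle", "color": "red"}, {"id": 2, "label": "truck"}]
corpus = merge_sources([("lidar_team", lidar), ("legacy_db", legacy)])
```

Priority-based filling is only one conflict policy; "most recent value wins", majority vote, or manual arbitration fit the same structure.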

We segment your data based on relevant criteria, filter out unnecessary or redundant elements, and statistically rebalance under- or over-represented classes.

This process improves the representativeness of use cases, reduces learning biases, and optimizes the generalization capability of your models.
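Statistical rebalancing can take several forms (undersampling, oversampling, class weighting). The Python sketch below shows the simplest one, random oversampling with replacement, assuming records with a hypothetical `label` field; `oversample` is an illustrative helper and the fixed seed keeps the result reproducible.

```python
import random
from collections import defaultdict

def oversample(records, label_field="label", seed=0):
    """Duplicate minority-class records at random until every class
    has as many records as the largest one. Expects a non-empty list."""
    rng = random.Random(seed)  # fixed seed: same rebalanced set on every run
    by_class = defaultdict(list)
    for rec in records:
        by_class[rec[label_field]].append(rec)
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        # Draw with replacement to fill the gap up to `target`.
        balanced.extend(rng.choices(group, k=target - len(group)))
    return balanced


data = [{"label": "car"}] * 6 + [{"label": "bike"}] * 2
balanced = oversample(data)
```

Oversampling should be applied to the training split only, after validation and test data are set aside; otherwise duplicated records leak into evaluation.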

We run a continuous quality process that includes regular checks, audits, corrections, and progressive improvements.

With versioning, every change to the dataset is tracked, documented, and reproducible.

This version management lets us control the impact of each modification and ensures the long-term stability of the trained models.
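To illustrate what tracking every change can mean at record level, the Python sketch below derives a deterministic version identifier from the dataset content and lists the records added, removed, or modified between two versions. It assumes JSON-serializable records with a hypothetical unique `id`; `version_id` and `diff_versions` are illustrative helpers. Tools such as DVC version whole files, and a record-level diff like this one complements them.

```python
import hashlib
import json

def version_id(records):
    """Deterministic, order-independent content hash of a dataset version."""
    lines = sorted(json.dumps(r, sort_keys=True) for r in records)
    return hashlib.sha256("\n".join(lines).encode("utf-8")).hexdigest()[:12]

def diff_versions(old, new, key="id"):
    """List record keys added, removed or modified between two versions."""
    old_by_key = {r[key]: r for r in old}
    new_by_key = {r[key]: r for r in new}
    return {
        "added": sorted(new_by_key.keys() - old_by_key.keys()),
        "removed": sorted(old_by_key.keys() - new_by_key.keys()),
        "modified": sorted(k for k in old_by_key.keys() & new_by_key.keys()
                           if old_by_key[k] != new_by_key[k]),
    }


v1 = [{"id": 1, "label": "car"}, {"id": 2, "label": "bike"}]
v2 = [{"id": 2, "label": "bicycle"}, {"id": 3, "label": "truck"}]
print(diff_versions(v1, v2))
# {'added': [3], 'removed': [1], 'modified': [2]}
```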

Human expertise + advanced tools

We combine data expertise with cutting-edge technologies: automated pipelines, specialized tools (SUSTech, CVAT, CloudCompare, Label Studio, etc.), quality controls, and dashboards. This hybrid human + software approach delivers fast, precise, and traceable curation.

Large-scale capability

With over 400 experts and a scalable organization, Infoscribe handles very large volumes without compromising quality. This covers peak workload management, short lead times, flexible capacity, multi-language and multi-format support, and industrial-grade project management, from POCs to large-scale deployments.

Built-in security and compliance

Every project is executed within a strict framework: ISO 27001-aligned processes, GDPR compliance with European hosting, fine-grained access control and full traceability, systematic data encryption, and regular audits. Together, these ensure end-to-end protection of your sensitive information.


SUPPORTED DATA & OUTPUT FORMATS

TEXT FORMATS (OCR, TRANSCRIPTION, NLP)

Input Formats (Source Files)

- .pdf, .txt, .docx, .odt, .rtf

- Text images / scans: .jpg, .tiff, .png

- Document archives: .zip, .tar.gz

Annotation Formats (Output)

- JSON (NLP structure): entities, relations, intents

- CoNLL / TSV / CSV (token-level annotations)

- Label Studio / Prodigy format

- Custom XML or CSV (depending on target model)

- ALTO XML / PAGE XML (structured OCR)

IMAGE FORMATS (2D)

Input Formats (Source Files)

- Standard images: .jpg, .jpeg, .png, .bmp, .tiff, .tif, .webp

- Archives / project structures: .zip, .tar, .rar

- Camera / drone-specific formats: .raw, .cr2, .nef, .dng, .exr

Annotation Formats (Output)

- CVAT for images (.xml, .json)

- COCO (instances_train.json, .json)

- Pascal VOC (.xml)

- YOLOv5 / YOLOv8 (.txt)

- LabelMe (.json, .txt)

- Label Studio (.json, .csv)

- SuperAnnotate (.json)

- VGG Image Annotator (VIA) (.json)

- Datumaro export (multi-format: COCO, VOC, YOLO, CVAT, etc.)
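Converting between these formats is largely a matter of coordinate conventions. COCO stores a box as `[x_min, y_min, width, height]` in pixels, while a YOLO label line holds a zero-based class index followed by the box centre and size, each normalized by the image width or height. A minimal Python sketch of that conversion; the helper names are hypothetical, and mapping COCO `category_id` values to class indices is left to the caller.

```python
def coco_bbox_to_yolo(bbox, img_w, img_h):
    """Convert a COCO box [x_min, y_min, width, height] in pixels to
    YOLO (x_center, y_center, width, height) normalized to [0, 1]."""
    x, y, w, h = bbox
    return ((x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h)

def yolo_line(class_index, bbox, img_w, img_h):
    """Format one line of a YOLO .txt label file (one file per image)."""
    xc, yc, w, h = coco_bbox_to_yolo(bbox, img_w, img_h)
    return f"{class_index} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


print(yolo_line(0, [100, 50, 200, 100], img_w=400, img_h=200))
# 0 0.500000 0.500000 0.500000 0.500000
```

Tools such as Datumaro automate these conversions across whole datasets; a small converter like this one is mostly useful for spot-checking exported files.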

VIDEO FORMATS

Input Formats (Source Files)

- .mp4, .avi, .mov, .mkv, .flv, .wmv

- Image Sequences (frame_00001.png, etc.)

Annotation Formats (Output)

- CVAT for video (.xml or .json, containing frames + interpolations)

- COCO Video / MOT (.json)

- VID / ImageNet VID

- Datumaro (video export): compatible with CVAT, COCO, and YOLO

3D FORMATS (POINT CLOUDS, LIDAR, PHOTOGRAMMETRY)

Input Formats (Source Files)

- .pcd (Point Cloud Data)

- .ply (Polygon File Format)

- .las / .laz (LiDAR standard)

- .xyz, .txt, .csv (X, Y, Z Coordinates)

- .e57 (3D scanner)

- .obj, .stl, .fbx (3D Meshes)

- .zip (CloudCompare, Metashape, etc. projects)

Annotation Formats (Output)

- CVAT for 3D (.json, .pcd, .ply)

- KITTI (.txt, .bin, .xml)

- SemanticKITTI (.label, .bin)

- OpenLabel (.json)

- Datumaro 3D (multi-format: KITTI, OpenLabel, CVAT 3D)

- CloudCompare projects (.bin, .cc, .txt)

- SUSTech projects (.json with 3D labels)

MEDICAL IMAGE FORMATS

Input Formats (Source Files)

- DICOM (.dcm, DICOM folders)

- NIfTI (.nii, .nii.gz)

- NRRD (.nrrd)

- MHD / RAW (.mhd, .raw)

- Analyze (.hdr, .img)

- Medical TIFF (.tiff, .ome.tiff)

Annotation Formats (Output)

- 3D Slicer segmentation (.nrrd, .seg.nrrd)

- Segmented NIfTI (.nii)

- PNG masks / overlays (.png + .csv correspondence table)

- CVAT for medical images (.xml, .json)

- Custom volumetric JSON (per client requirements)

SATELLITE AND AERIAL DATA

Input Formats (Source Files)

- .tif, .tiff, .jp2 (GeoTIFF, Sentinel, orthophotos)

- .vrt, .shp, .geojson, .kml, .kmz

- Raster or Multi-Band Mosaics

Annotation Formats (Output)

- GeoJSON (Polygons, Points, Lines)

- Shapefile (.shp, .dbf, .prj)

- Georeferenced COCO / CVAT (with added coordinates)

- Segmented Raster (.tif / .png with Colormap)

FAQ

Frequently Asked Questions

Why is data curation important for AI models?

Data curation is crucial for improving the performance and robustness of artificial intelligence models, as it ensures that the data used for training, validation, and testing is clean, consistent, relevant, and representative of reality. In any AI project, the quality of the model depends directly on the quality of the dataset: even the most advanced algorithm will produce limited, biased, or unstable results if the data is incomplete, poorly structured, or noisy. Data curation specifically addresses these weaknesses by ensuring a rigorous selection of sources, systematic cleaning of anomalies, and consistent normalization of formats.

By consolidating, sorting, and enriching the data, we reduce biases and spurious variations that prevent models from learning reliable patterns. This step also allows the identification of duplicates, inconsistencies, missing values, input errors, or outdated data, which can significantly degrade performance and lead to overfitting or unpredictable behavior. Proper curation also reveals underrepresented areas in the dataset, enabling class rebalancing and reinforcing the diversity necessary for model generalization.

Data curation also plays a key role in traceability and versioning, which are essential in an MLOps workflow. By documenting the data’s provenance, the applied transformations, and the selection criteria, it ensures full transparency, necessary for audits, compliance, and continuous model improvement.

Finally, curation facilitates the reuse and evolution of the dataset over time. Well-structured, properly labeled, and thoroughly documented data makes it faster to train new models, test variants, and evolve pipelines without starting from scratch, and it supports long-term robustness, even in highly variable environments.

How do you ensure that curated data remains reusable?

We ensure the reusability of curated data through a methodical and rigorous approach aimed at making datasets usable, traceable, well-documented, and compatible with all future use cases: annotation, model training, integration into MLOps pipelines, or deployment in other systems.

1. Data normalization and structuring

We apply standardization practices to ensure complete consistency across different sources. This includes:

- Harmonizing formats (CSV, JSON, Parquet, XML…),

- Standardizing field names and schemas,

- Normalizing values (units, structures, typologies).

This normalization ensures that the data can be understood and reused by downstream systems, models, and analysis tools.

2. Comprehensive and relevant metadata production

We enrich each dataset with exhaustive metadata: data provenance, content description, applied transformations, field typologies, completeness rates, and applied business rules. This metadata facilitates understanding, handling, and, most importantly, integrating the dataset into existing pipelines.
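Completeness rates and field typologies can be computed directly from the data. A minimal Python sketch, assuming a non-empty list of dictionary records and treating `None` and empty strings as missing; `field_profile` is a hypothetical helper whose output could feed a metadata sheet.

```python
def field_profile(records):
    """Completeness rate and observed value types for every field.

    Assumes a non-empty list of dict records; a value counts as present
    when it is neither None nor an empty string.
    """
    fields = sorted({f for r in records for f in r})
    profile = {}
    for f in fields:
        present = [r[f] for r in records if r.get(f) not in (None, "")]
        profile[f] = {
            "completeness": round(len(present) / len(records), 3),
            "types": sorted({type(v).__name__ for v in present}),
        }
    return profile


recs = [
    {"id": 1, "label": "car", "length_m": 4.2},
    {"id": 2, "label": "", "length_m": 4},
    {"id": 3, "label": "bike"},
]
profile = field_profile(recs)
```

Mixed types in one field (here `float` and `int` for `length_m`) are a typical finding that normalization then resolves.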

3. Creation of data catalogs

Curated data is organized into structured catalogs to enable:

- Centralized management,

- Easy search,

- Clear version navigation,

- Visibility of all available resources.

These catalogs become a reliable source for Data, AI, business, and annotation teams.

4. Complete documentation and traceability (Data Lineage)

Our documentation precisely describes:

- All applied transformations,

- Cleaning rules,

- Tools used,

- Successive dataset versions,

- Limitations, exceptions, or special cases.

This traceability (“data lineage”) is essential for auditing, reproducing, or adapting the data in the future.
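A lineage log can be as simple as recording, for each transformation, its name, parameters, row counts, and fingerprints of its input and output. The Python sketch below assumes JSON-serializable records; `LineageLog` and `fingerprint` are hypothetical helpers, not part of a specific tool.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

def fingerprint(records):
    """Short SHA-256 fingerprint of a list of JSON-serializable records."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]

@dataclass
class LineageLog:
    steps: list = field(default_factory=list)

    def apply(self, name, func, records, **params):
        """Run one transformation and record what it did and with which parameters."""
        output = func(records, **params)
        self.steps.append({
            "step": name,
            "params": params,
            "input": fingerprint(records),
            "output": fingerprint(output),
            "rows_in": len(records),
            "rows_out": len(output),
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return output


log = LineageLog()
data = [{"id": 1, "label": "car"}, {"id": 2, "label": ""}]
clean = log.apply("drop_empty_labels",
                  lambda rs, column: [r for r in rs if r[column]],
                  data, column="label")
print(log.steps[0]["rows_in"], log.steps[0]["rows_out"])  # 2 1
```

Because each step stores the fingerprint of its output, a later audit can check that a given dataset file really is the result of the documented chain of transformations.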

5. Interoperability with existing systems and pipelines

Curated data is prepared to integrate seamlessly with:

- Annotation platforms,

- AI training tools (TensorFlow, PyTorch, Hugging Face…),

- MLOps solutions (MLflow, DVC, ClearML…),

- Databases and data warehouses.

Our goal is to enable a smooth transition between stages: curation → annotation → training → production.

6. Preparation for future annotation and AI training phases

We structure the dataset to make future phases fast and efficient:

- Segmentation into coherent batches,

- Contextual enrichment,

- Versioning to facilitate updates,

- Formats compatible with 2D/3D or textual annotation tools,

- Alignment between raw data, curated data, and annotated data.

This proactive preparation significantly reduces costs and delays for future AI iterations.

Conclusion

Through this approach combining normalization, metadata, documentation, catalogs, and interoperability, we ensure that curated data is not only clean today but also fully reusable, scalable, and suited to the future needs of your AI projects.

Who is data curation for?

Data curation is intended for all organizations that need to manage large volumes of data and require reliability, consistency, and traceability to support their data, AI, or business projects. It is particularly relevant for:

1. Companies developing AI and Machine Learning models

They need clean, standardized, and well-documented data to train robust models (vision, NLP, classification, prediction, etc.).

2. Organizations handling heterogeneous or multi-source data

Organizations dealing with dispersed, duplicated, redundant, or inconsistent data, such as:

- Data lakes,

- Customer histories,

- Product databases,

- Operational logs.

3. Regulated sectors

Data curation is essential to ensure compliance, auditability, and data quality in:

- Healthcare,

- Finance/insurance,

- Energy,

- Public sector,

- Legal.

4. Data, AI, BI, and MLOps teams

For these teams, curation facilitates:

- The creation of reliable datasets,

- Versioning,

- Documentation,

- Integration into automated pipelines.

5. Organizations aiming to industrialize their data processes

Companies that want to transform raw data into usable digital assets for:

- Business optimization,

- Automation,

- Decision-making,

- Data governance.

6. Companies preparing data for annotation

Whether for textual, 2D, 3D, or multimodal annotation, data curation ensures that data is ready, structured, and usable for subsequent phases.

In summary

Data curation is for any organization looking to transform raw data into reliable, structured, interoperable data, ready to be used in artificial intelligence, advanced analytics, or automation projects.

How do you evaluate the quality of a dataset after curation?

We evaluate the quality of a dataset after curation by systematically checking internal consistency, completeness of information, and compliance with the rules defined in the specifications. We analyze the presence of residual anomalies such as duplicates, missing values, format errors, or inconsistencies across different data sources. We also perform normalization checks to ensure that fields, units, and typologies follow a consistent structure throughout the dataset.

Next, we verify the representativeness of the data to ensure that no class, category, or typology is under- or over-represented, which could introduce bias in future annotation or model training phases. Each control step is documented to ensure full traceability, including data provenance and applied transformations.

Finally, we validate the operational quality of the dataset by testing it against the intended use cases: ingestion into an AI pipeline, preparation for annotation, integration into a business system, or analytical use. This series of validations ensures that curated data is not only clean and consistent but also truly usable in a production environment.
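The residual-anomaly, specification, and representativeness checks described above can be expressed as an automated gate. The Python sketch below assumes records with a hypothetical `label` field, a set of allowed labels, and a minimum class share standing in for the project's specification; `quality_report` is an illustrative helper, and real specifications usually add format, range, and cross-source consistency rules.

```python
import json

def quality_report(records, allowed_labels, min_class_share=0.05, label_field="label"):
    """Post-curation checks against a (hypothetical) specification.

    Counts residual exact duplicates, labels outside the allowed set, and
    allowed classes whose share of the dataset is below `min_class_share`.
    """
    if not records:
        raise ValueError("empty dataset")
    seen, duplicates, invalid, counts = set(), 0, 0, {}
    for rec in records:
        key = json.dumps(rec, sort_keys=True)
        if key in seen:
            duplicates += 1
        seen.add(key)
        label = rec.get(label_field)
        if label in allowed_labels:
            counts[label] = counts.get(label, 0) + 1
        else:
            invalid += 1
    under = sorted(c for c in allowed_labels
                   if counts.get(c, 0) / len(records) < min_class_share)
    return {
        "duplicates": duplicates,
        "invalid_labels": invalid,
        "underrepresented": under,
        "passed": duplicates == 0 and invalid == 0 and not under,
    }


recs = [{"id": i, "label": "car"} for i in range(19)] + [{"id": 19, "label": "bike"}]
report = quality_report(recs, {"car", "bike", "truck"}, min_class_share=0.04)
```

A failing report does not fix anything by itself; it tells reviewers where to look before the dataset moves on to annotation or training.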