Computer vision has become one of the cornerstones of modern artificial intelligence. Thanks to advances in deep learning, machines are now capable of understanding the visual world with a level of accuracy that once belonged to science fiction: recognizing a face, detecting an object, analyzing a scene, understanding text within an image, segmenting a 3D environment, or interpreting videos in real time.

But behind this apparent technological magic lies a central element—often invisible yet absolutely essential: the quality of the data, annotations, and datasets used to train the models.

What Is Computer Vision ?

Computer vision is the field of AI that enables machines to perceive, understand, and interpret images or videos. Its goal is simple: to provide computer systems with visual analysis capabilities comparable to—or even superior to—those of humans

The Main Tasks of Computer Vision

Computer vision encompasses several specialized tasks:

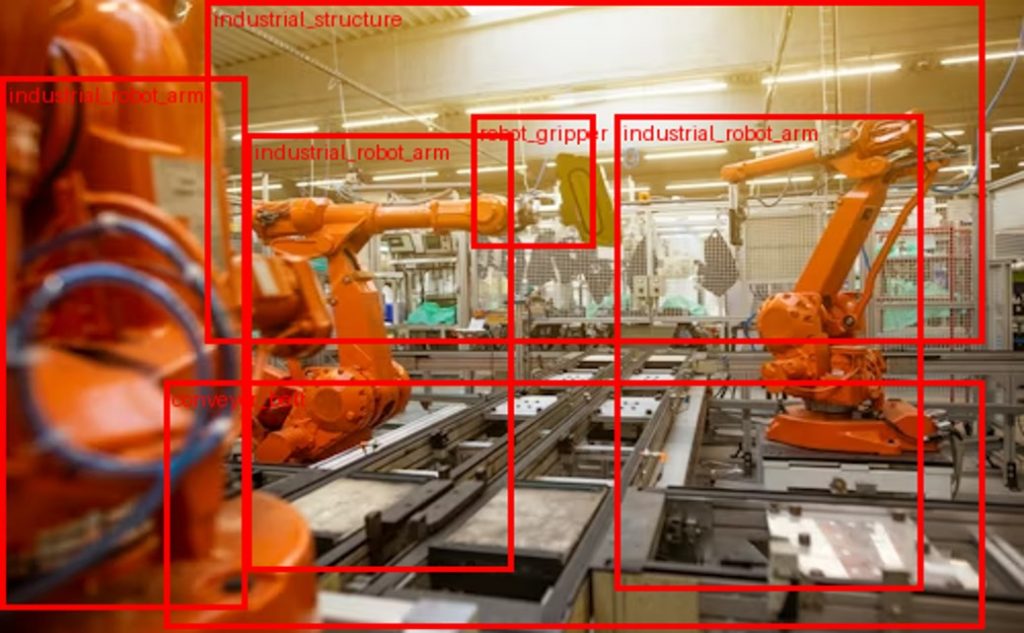

- Object detection: identifying and locating objects in an image

- Image classification: determining the category an image belongs to

- Segmentation: dividing and isolating each component of an image

- Text recognition (OCR): extracting textual information

- Scene analysis: understanding the overall environment

- Object tracking: following an entity in a video

- 3D vision: analysis using LiDAR, point clouds, and stereo cameras

The Role of Deep Learning in Modern Computer Vision

Fifteen years ago, computer vision relied on handcrafted approaches: edge detection, color histograms, SIFT and HOG descriptors. The arrival of deep learning completely transformed the field. Today, convolutional neural networks (CNNs), vision transformers, self-supervised models, and multimodal architectures achieve performance far superior to traditional approaches

Major Families of Deep Learning Models in Vision

- CNNs (Convolutional Neural Networks): the pioneers of the revolution (AlexNet, VGG, ResNet…)

- U-Net and derivatives: models specialized in image segmentation (medical, industrial)

- YOLO, SSD, Faster R-CNN: models optimized for real-time detection

- Vision Transformers (ViT): a new generation inspired by NLP, offering exceptional performance

- SAM – Segment Anything Model: a general-purpose automatic segmentation model designed for large-scale annotation

With these models, even the most complex tasks become accessible—provided that the dataset is properly prepared, annotated, and segmented

Datasets Are the Heart of Computer Vision

A deep learning model learns only from data.

The quality of a dataset directly determines:

- model accuracy

- generalization capability

- robustness to variations

- production performance

- level of bias or error

The world’s largest models (GPT-4o, SAM, CLIP…) were trained on datasets containing billions of annotations.

What Makes a Good Vision Dataset ?

A high-performance dataset must be:

- diverse (lighting, angles, environments)

- representative (covering all real-world cases)

- precise (consistent annotation across annotators)

- balanced (no dominant class)

- sufficiently large

- well documented (clear labels, guidelines, classes)

The Importance of Annotation in Computer Vision

Annotation consists of describing, labeling, or structuring images and videos.

It is a critical—and often underestimated—step that directly conditions model performance.

Types of Annotation in Vision

Bounding boxes

- Polygons

- Precise contours

- Semantic segmentation

- Instance segmentation

- 3D cuboids

- Keypoints / landmarks

- Human skeletons

- Image classification

Why Annotation Is Difficult

Annotation must be:

- precise

- fast

- consistent across annotators

- faithful to guidelines

- adapted to the domain (medical, automotive, industrial…)

Poor labeling = an unusable model

Segmentation: A Fundamental Pillar

Segmentation is one of the most important tasks in computer vision. It allows each object or region of interest to be separated, providing pixel-level understanding.

Semantic Segmentation

Each pixel is assigned a class.

Example: road, car, tree, sky.

Instance Segmentation

Each distinct object receives its own identifier.

Example: car #1, car #2…

Panoptic Segmentation

A combination of the two, enabling complete scene understanding.

Why It’s Crucial

Segmentation improves:

- safety (automotive, industrial safety)

- detection accuracy

- 3D understanding

- synthetic scene generation

- digital twins (Industry 4.0)

Industries Using Computer Vision and Deep Learning

Computer vision is transforming entire industries:

Automotive & Mobility

- Autonomous driving

- Obstacle detection

- Lane analysis

Industry & Logistics

- Quality control

- Defect detection

- Robotic vision

Healthcare & Medical

- Medical image analysis

- Anatomical segmentation (tumors, organs)

- Retail & E-commerce

- Product recognition

- In-store traffic analysis

Defense & Security

- Intrusion detection

- Intelligent video surveillance

How to Train a Deep Learning Vision Model ?

A typical pipeline includes:

Dataset collection

- Data curation

- Annotation & segmentation

- Sample preparation

- Model training

- Evaluation (mAP, IoU, F1, Recall…)

- Optimization

- Deployment within an MLOps pipeline

Future Trends in Computer Vision

Future trends in computer vision point toward a spectacular evolution toward smarter, more autonomous models capable of understanding the visual world with unprecedented depth. One major advancement lies in vision transformers, which are gradually surpassing traditional CNN architectures thanks to superior global relationship modeling within images. At the same time, multimodal vision-language fusion is enabling systems to interpret scenes and describe or explain them in natural language, as seen in Vision-Language Models (VLMs).

The rise of self-supervised AI is another major breakthrough, allowing models to learn from vast quantities of unlabeled images and reducing dependence on costly annotated datasets. Generative models—especially diffusion models—are transforming how realistic images are created, how datasets are augmented, and how rare scenarios are simulated.

Universal segmentation, driven by models such as SAM (Segment Anything Model), aims to provide tools capable of segmenting any object without task-specific training. Finally, combining synthetic data with real-world data enables the creation of richer, more diverse datasets better suited to complex environments.

The future of computer vision will be hybrid, multimodal, and self-learning.

Conclusion

Computer vision and deep learning now occupy a central role in global digital transformation. These technologies are no longer seen as simple innovations, but as strategic levers capable of redefining industrial processes, improving decision-making, automating complex tasks, and creating new user experiences. Yet despite the growing power of AI models and the emergence of ever more advanced architectures, no system can deliver reliable results without a solid foundation: the quality of the data that fuels its learning.

The first indispensable pillar is the construction of a high-quality dataset. A computer vision model learns only from the examples it is given; if the data is poorly selected, unbalanced, noisy, or insufficiently representative of the real world, even the best model will fail to deliver satisfactory performance. A rich, diverse, and well-structured dataset is therefore the bedrock of any robust visual solution.

Annotation plays an equally decisive role. It precisely defines what the model must learn: identifying an object, delineating a region, understanding a scene, distinguishing classes, or extracting key elements. Rigorous annotation—consistent across annotators and aligned with clear guidelines—avoids ambiguities and errors that would undermine model effectiveness. This often long and demanding step directly determines the quality of supervised learning.

Segmentation, whether semantic, instance, or panoptic, is a third essential factor. It provides pixel-level understanding of images, enabling deep learning systems to better analyze visual environments, detect fine or complex objects, and achieve higher precision in critical contexts such as robotics, healthcare, or automotive applications. Precise segmentation enhances not only model performance, but also robustness to real-world variability.

Finally, intelligent data management is essential to ensure the longevity and scalability of AI projects. This includes curation, cleaning, versioning, documentation, governance, and continuous data preparation for new training phases. A well-defined strategy ensures that models remain relevant over time, can be retrained efficiently, and integrate smoothly into modern MLOps pipelines.

Companies capable of industrializing these steps—collection, curation, annotation, segmentation, and dataset management—will gain a significant competitive advantage in all sectors leveraging computer vision. They will be better equipped to develop high-performance, scalable solutions adapted to real-world challenges, while reducing costs, risks, and time to production. In a world where visual data is growing exponentially, mastering the entire data value chain becomes a decisive competitive advantage in shaping the future of visual artificial intelligence.