Text Data Annotation Service for AI

Transform your texts into actionable knowledge for artificial intelligence.


Give meaning to your data

Text data annotation is a strategic step in building artificial intelligence systems capable of understanding, interpreting, and generating natural language. It involves structuring, enriching, and labeling textual content to enable a machine learning model to learn how to recognize entities, relationships, intents, or emotions.

Custom NLP datasets

At Infoscribe, we combine linguistic expertise, data engineering, and state-of-the-art annotation tools to produce high-quality textual corpora. Our teams design, annotate, and validate multilingual datasets intended for a wide range of applications, including NLP, chatbots, search engines, competitive intelligence, cybersecurity, legal intelligence, and digital health.

Structuring text to better train AI

Text annotation makes it possible to identify entities, concepts, and relationships, to build consistent training datasets for supervised learning, and to improve the performance and robustness of language models. It also enhances their explainability through standardized and traceable annotations, while ensuring the quality and compliance of corpora in regulated environments.

Expertise

Infoscribe’s text annotation methodology

Our projects follow a scientific approach, driven by quality and security, ensuring reliable and fully controlled processes at every stage.

Each project begins with a scoping workshop with the client’s teams:

- AI model objectives (classification, extraction, sentiment analysis, summarization, etc.);

- Volume, diversity, and sources of textual data;

- Languages and usage contexts (legal, technical, medical, marketing, etc.);

- Desired levels of granularity and annotation schemes.

A detailed annotation guide is then developed, including label definitions, positive/negative examples, edge cases, orthographic conventions, and handling of linguistic ambiguities.

Raw texts are:

- Cleaned (removal of noise, duplicates, extraneous tags, and errors);

- Normalized (encoding, segmentation, linguistic harmonization);

- Anonymized (removal of personal data in compliance with GDPR);

- Segmented into relevant units (sentence, paragraph, document).
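As an illustration only, the preparation steps above could be sketched in Python as follows. This is a minimal sketch under stated assumptions, not Infoscribe's actual pipeline: the function names (`clean`, `normalize`, `mask_emails`, `split_sentences`, `preprocess`) are hypothetical, the sentence splitter is deliberately naive, and masking e-mail addresses covers only one narrow kind of personal data, far short of full GDPR anonymization.

```python
import re
import unicodedata

# Hypothetical patterns for this sketch; real projects need broader rules.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
TAG_RE = re.compile(r"<[^>]+>")


def clean(text: str) -> str:
    """Strip leftover markup tags and collapse runs of whitespace."""
    text = TAG_RE.sub(" ", text)
    return re.sub(r"\s+", " ", text).strip()


def normalize(text: str) -> str:
    """Apply Unicode NFC normalization so equivalent characters compare equal."""
    return unicodedata.normalize("NFC", text)


def mask_emails(text: str) -> str:
    """Replace e-mail addresses with a placeholder token."""
    return EMAIL_RE.sub("[EMAIL]", text)


def split_sentences(text: str) -> list[str]:
    """Naive split on '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s]


def preprocess(docs: list[str]) -> list[list[str]]:
    """Clean, normalize, mask, drop exact duplicates, then segment each document."""
    seen: set[str] = set()
    result = []
    for doc in docs:
        text = mask_emails(normalize(clean(doc)))
        if not text or text in seen:
            continue  # skip empty documents and exact duplicates
        seen.add(text)
        result.append(split_sentences(text))
    return result
```

Calling `preprocess` on a list of raw strings returns one list of sentences per unique, non-empty document.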

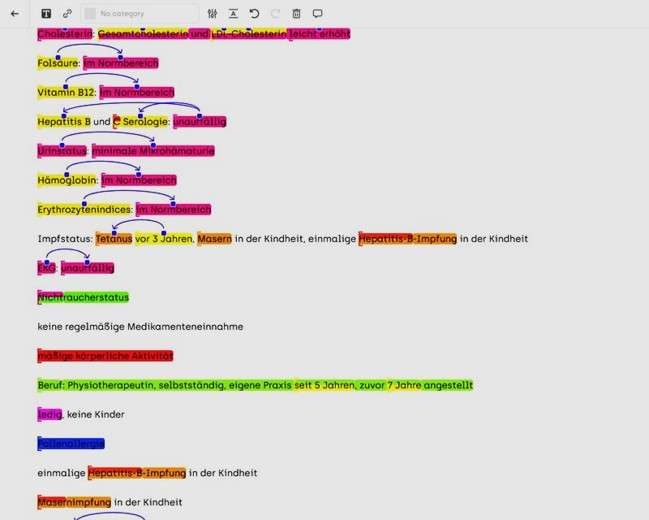

We use professional tools (Label Studio, Prodigy, INCEpTION, LightTag, or our in-house tools) combining AI and human expertise:

- Automatic pre-annotation using language models (BERT, spaCy, GPT, etc.);

- Human review for validation and adjustment;

- Inter-annotator consistency measurement (Cohen’s Kappa, F1 score);

- Iterative loops to refine guidelines and stabilize labels.
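For readers unfamiliar with Cohen's Kappa, it compares the observed agreement between two annotators, p_o, with the agreement expected by chance, p_e, as kappa = (p_o - p_e) / (1 - p_e). The sketch below is a plain-Python illustration for two annotators labeling the same items; the function name `cohen_kappa` is hypothetical and does not describe any specific tool named above.

```python
from collections import Counter


def cohen_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items.")
    n = len(labels_a)
    # Observed agreement: share of items on which both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies, summed over labels.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["POS", "POS", "NEG", "NEG"]
annotator_2 = ["POS", "NEG", "NEG", "NEG"]
kappa = cohen_kappa(annotator_1, annotator_2)
```

In this toy example the annotators agree on three of four items (p_o = 0.75) while chance agreement is 0.5, so kappa is 0.5.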

Each dataset undergoes an audit for consistency, completeness, and reliability:

- Verification of syntactic and semantic consistency;

- Random checks by an expert reviewer;

- Calculation of quality metrics (critical error rate, inter-annotator agreement, class coverage);

- Generation of a comprehensive quality report prior to delivery.
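Two of the metrics listed above can be illustrated with a short Python sketch. The definitions used here are assumptions made for the example, since the exact formulas are not specified: class coverage is taken as the share of schema labels that appear at least `min_count` times, and critical error rate as the fraction of reviewed items flagged as critical by a reviewer. The function names are hypothetical.

```python
from collections import Counter


def class_coverage(labels: list[str], schema: set[str], min_count: int = 1) -> float:
    """Share of schema labels that appear at least `min_count` times in the dataset."""
    if not schema:
        raise ValueError("schema must not be empty")
    counts = Counter(labels)
    covered = sum(1 for label in schema if counts[label] >= min_count)
    return covered / len(schema)


def critical_error_rate(review_flags: list[bool]) -> float:
    """Fraction of reviewed items that a reviewer flagged as critical errors."""
    if not review_flags:
        raise ValueError("no reviewed items")
    return sum(review_flags) / len(review_flags)


coverage = class_coverage(["PER", "PER", "ORG"], {"PER", "ORG", "LOC", "DATE"})
error_rate = critical_error_rate([False, True, False, False])
```

A low coverage value signals that some labels in the schema have too few examples to train on, which is typically worth addressing before delivery.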

Security and compliance

At Infoscribe, the security of textual data is non-negotiable. Our infrastructures are ISO 27001 certified and GDPR compliant. We implement:

- Data encryption (AES-256, SSL/TLS);

- Multi-factor authentication and environment segmentation;

- Comprehensive access logging and auditing;

- Project- and role-based isolation;

- Sector-specific compliance (banking, healthcare, defense, etc.).

Each project benefits from full traceability: metadata, annotation history, audit logs, and sampling-based verification.

TYPES OF TEXT ANNOTATIONS HANDLED

Semantic annotation

Adding labels and metadata to capture the meaning of texts:

- Thematic categorization (economics, health, politics, technology, etc.);

- Annotation of key concepts and meaningful words;

- Intent tagging in queries or dialogues.

Named Entity Recognition (NER)

Identification of specific entities:

- People, organizations, products, locations, dates, currencies, technical references;

- Hierarchical and contextual entity schemas;

- Used for monitoring, intelligence, compliance, or information retrieval.

Text classification

Assigning one or more categories to a text:

- Thematic, emotional, functional, or regulatory;

- Used for moderation, customer segmentation, or incident analysis.

Sentiment and opinion analysis

Detection of tone (positive, negative, neutral) and emotional intensity:

- Analysis of customer feedback, social media, product reviews;

- Supports e-reputation models or user experience evaluation.

Relation annotation

Highlighting links between entities:

- Hierarchical, temporal, or causal relationships;

- Identification of interactions (e.g., “Company X acquires Company Y”).
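To make the "Company X acquires Company Y" example concrete, a relation annotation can be represented as two entity spans plus a typed link between them. The Python sketch below is one possible representation, not a prescribed schema: the `Entity` and `Relation` classes, the `find_entity` helper, and the `ORG` and `ACQUIRES` labels are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    start: int  # character offset, inclusive
    end: int    # character offset, exclusive
    label: str


@dataclass(frozen=True)
class Relation:
    head: Entity  # the entity the relation starts from
    tail: Entity  # the entity the relation points to
    label: str


def find_entity(text: str, surface: str, label: str) -> Entity:
    """Locate the first occurrence of `surface` in `text` and wrap it as an Entity."""
    start = text.index(surface)  # raises ValueError if the mention is absent
    return Entity(start, start + len(surface), label)


text = "Company X acquires Company Y"
buyer = find_entity(text, "Company X", "ORG")
target = find_entity(text, "Company Y", "ORG")
relation = Relation(head=buyer, tail=target, label="ACQUIRES")
```

Storing character offsets rather than copied strings keeps each annotation traceable to its exact position in the source text.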

Syntactic and morphological annotation

Segmenting text according to its linguistic structure:

- Tokens, lemmas, syntactic dependencies;

- POS (Part-of-Speech) and language grammar annotation.

Coreference & linking

Linking entities mentioned in different forms:

- “The president,” “Mr. Dupont” → same entity;

- Essential for comprehension and summarization models.


SUPPORTED DATA & OUTPUT FORMATS

Text formats (OCR, transcription, NLP)

Input formats (source files)

- .pdf, .txt, .docx, .odt, .rtf

- Text images / scans: .jpg, .tiff, .png

- Document archives: .zip, .tar.gz

Annotation formats (output)

- JSON (NLP structure): entities, relations, intents

- CoNLL / TSV / CSV (token-level annotations)

- Label Studio / Prodigy format

- Custom XML or CSV (depending on target model)

- ALTO XML / PAGE XML (structured OCR)

- Custom output formats (set up by our team)
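To show how two of these output formats relate, the Python sketch below converts a JSON record with character-offset entities into CoNLL-style token-level lines using BIO tags. It is an illustration under stated assumptions, not a description of the delivered files: the JSON field names (`text`, `entities`, `start`, `end`, `label`), the `to_conll` function, and the whitespace tokenization are all hypothetical choices.

```python
import json
import re


def to_conll(record: dict) -> str:
    """Convert one record with character-offset entities to tab-separated BIO lines."""
    text = record["text"]
    lines = []
    for match in re.finditer(r"\S+", text):  # whitespace tokenization (an assumption)
        tag = "O"
        for ent in record["entities"]:
            # A token belongs to an entity if it lies fully inside the entity span.
            if ent["start"] <= match.start() and match.end() <= ent["end"]:
                prefix = "B" if match.start() == ent["start"] else "I"
                tag = f"{prefix}-{ent['label']}"
                break
        lines.append(f"{match.group()}\t{tag}")
    return "\n".join(lines)


record = json.loads(
    '{"text": "Mr. Dupont joined Company X in 2021", '
    '"entities": [{"start": 0, "end": 10, "label": "PER"}, '
    '{"start": 18, "end": 27, "label": "ORG"}, '
    '{"start": 31, "end": 35, "label": "DATE"}]}'
)
print(to_conll(record))
```

Each output line holds one token and its tag, with `B-` marking the first token of an entity, `I-` a continuation, and `O` a token outside any entity.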

FAQ

Frequently Asked Questions

We offer a wide range of text annotations tailored to the needs of AI, NLP, and document processing projects. Our teams specifically perform:

- Named Entity Recognition (NER): precise extraction of people, locations, organizations, dates, amounts, products, and other client-defined entities.

- Text classification: automatic categorization of documents, sentences, or passages based on themes, intents, or priority levels.

- Information Extraction (IE): identification and structuring of key elements in text, such as attributes, values, statuses, relationships, or business fields.

- Semantic annotation: enriching text with tags, conceptual links, or metadata to facilitate analysis or NLP model training.

- Sentiment and tone analysis: emotional or qualitative annotation (positive, neutral, negative, subjective, objective, etc.).

- Text segmentation: dividing documents or conversations into logical sections (chapters, sentences, intents, speaker turns, etc.).

- Conversational annotation: identification of roles (agent/client), detection of intents, keywords, questions, answers, and emotions in dialogues.

- Anonymization and pseudonymization: masking personal or sensitive data according to specific GDPR rules.

- Annotation on complex documents (PDFs, scans, OCR): enriched text extraction, page classification, content hierarchy, multimodal annotation (text + structure).

Depending on your needs, we also create custom annotation schemas tailored to your domain (legal, medical, insurance, retail, finance, industrial).

We operate across a wide range of industries, each with specific text annotation needs to support artificial intelligence, natural language processing, and document automation projects. Thanks to our expertise and flexible workflows, we assist both technology companies and organizations with complex regulatory or business requirements.

In the medical sector, we perform clinical entity extraction, annotation of medical reports, structuring of patient records, and anonymization of sensitive information. In insurance, we annotate claims, customer interactions, contracts, and loss reports to improve automatic understanding and decision-making. In finance, we handle regulatory documents, banking transactions, reports, and fraud typologies to train specialized NLP models.

We also work in the legal sector for contract analysis, clause extraction, document classification, and identification of critical elements. For retail and e-commerce, we annotate customer reviews, product descriptions, catalogs, and conversations to enhance recommendation systems and automated customer support. In customer service, we process tickets, chats, emails, and voice transcriptions to strengthen intent analysis, sentiment detection, and service quality models.

The most requested service types include Named Entity Recognition (NER), text classification, information extraction, sentiment analysis, text segmentation, conversational annotation, and GDPR-compliant anonymization. We also provide custom annotation schemas tailored to each client’s business requirements and AI models.

Thanks to this versatility, we deliver proven solutions for companies looking to optimize the quality of their textual data and deploy high-performing, robust NLP systems.

We ensure the quality of text annotations through a rigorous methodology designed to deliver reliable, consistent, and directly usable data for NLP models. Our process begins with the creation of detailed guidelines, developed in collaboration with our clients, to clearly define annotation rules, ambiguous examples, edge cases, and the expected level of granularity. These guidelines serve as a reference throughout the project and ensure uniform interpretation, even when multiple teams work simultaneously on large volumes of data.

Next, we deploy specialized annotators, trained in advanced linguistic tasks such as Named Entity Recognition (NER), information extraction, sentiment analysis, or conversational annotation. For demanding domains—medical, legal, finance, insurance—we select experienced profiles and conduct calibration sessions to ensure precise understanding of domain-specific vocabulary and associated requirements.

Our approach includes multiple levels of quality control. Each annotation batch is reviewed through manual checks, sample audits, inter-annotator comparisons, and, where applicable, internal consistency metrics. Divergences, missing labels, semantic inconsistencies, and interpretation errors are detected and corrected. This multi-step system guarantees consistent accuracy across the entire dataset, regardless of the number of annotators involved.

For large-scale projects, we also integrate tracking, versioning, and automated quality control tools, enabling rapid detection of anomalies, quality variations, and deviations from guidelines. These tools provide full traceability: every annotation can be located, verified, and corrected if needed.

By combining linguistic expertise, industrialized processes, and systematic quality control, we deliver reliable, consistent text annotations perfectly suited for training high-performing AI models.

We determine the cost of a text annotation project based on several factors: the volume of documents to be processed, the complexity of the tasks (NER, extraction, classification, anonymization, etc.), the required level of expertise, the languages involved, and the quality control or review requirements.

We also take into account the formats provided, the quality of the source data, any preprocessing needs, and the expected delivery speed. Based on this analysis, we offer transparent, tailored pricing that optimizes costs while ensuring a level of quality that meets the project’s requirements.